Robot Search and Rescue

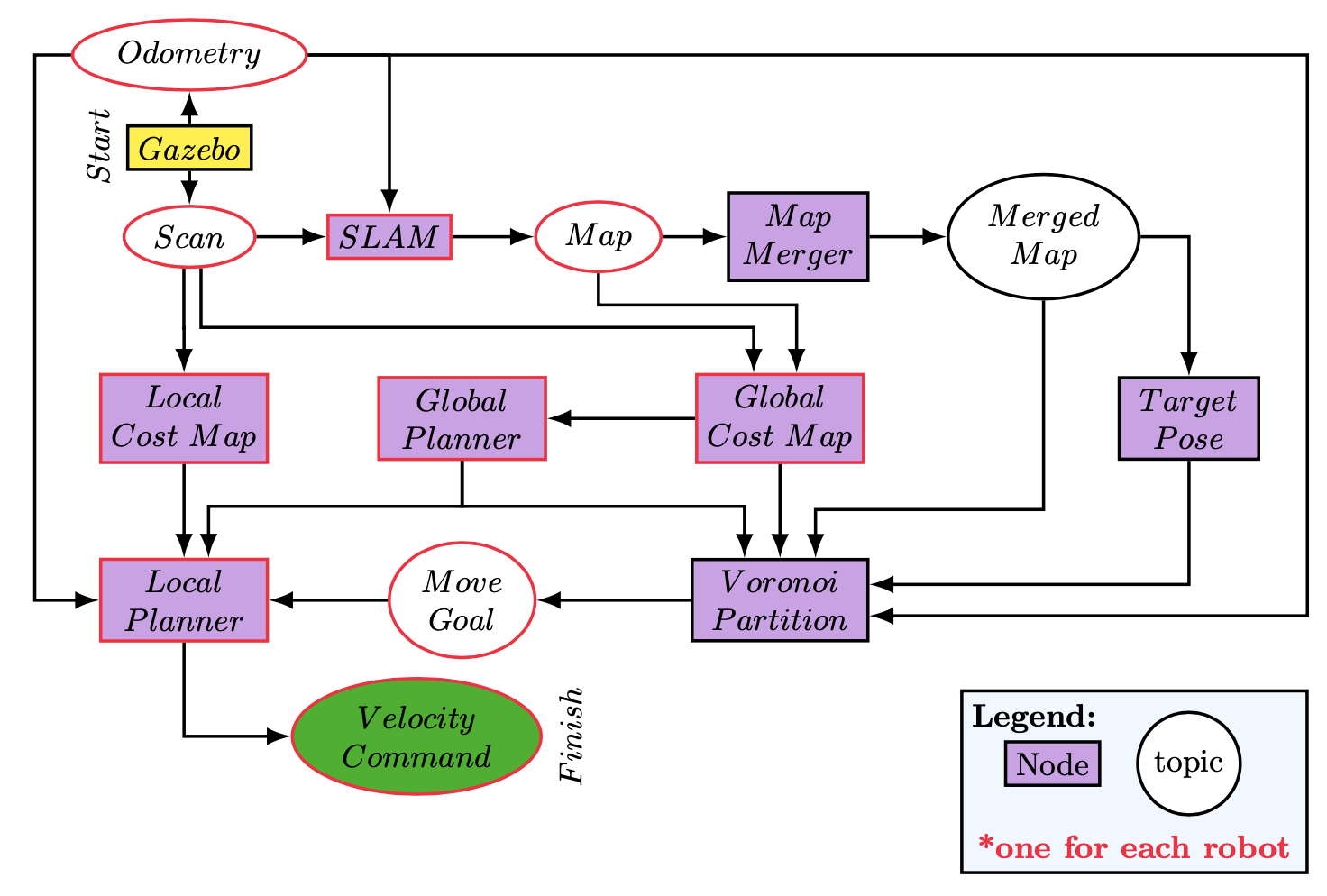

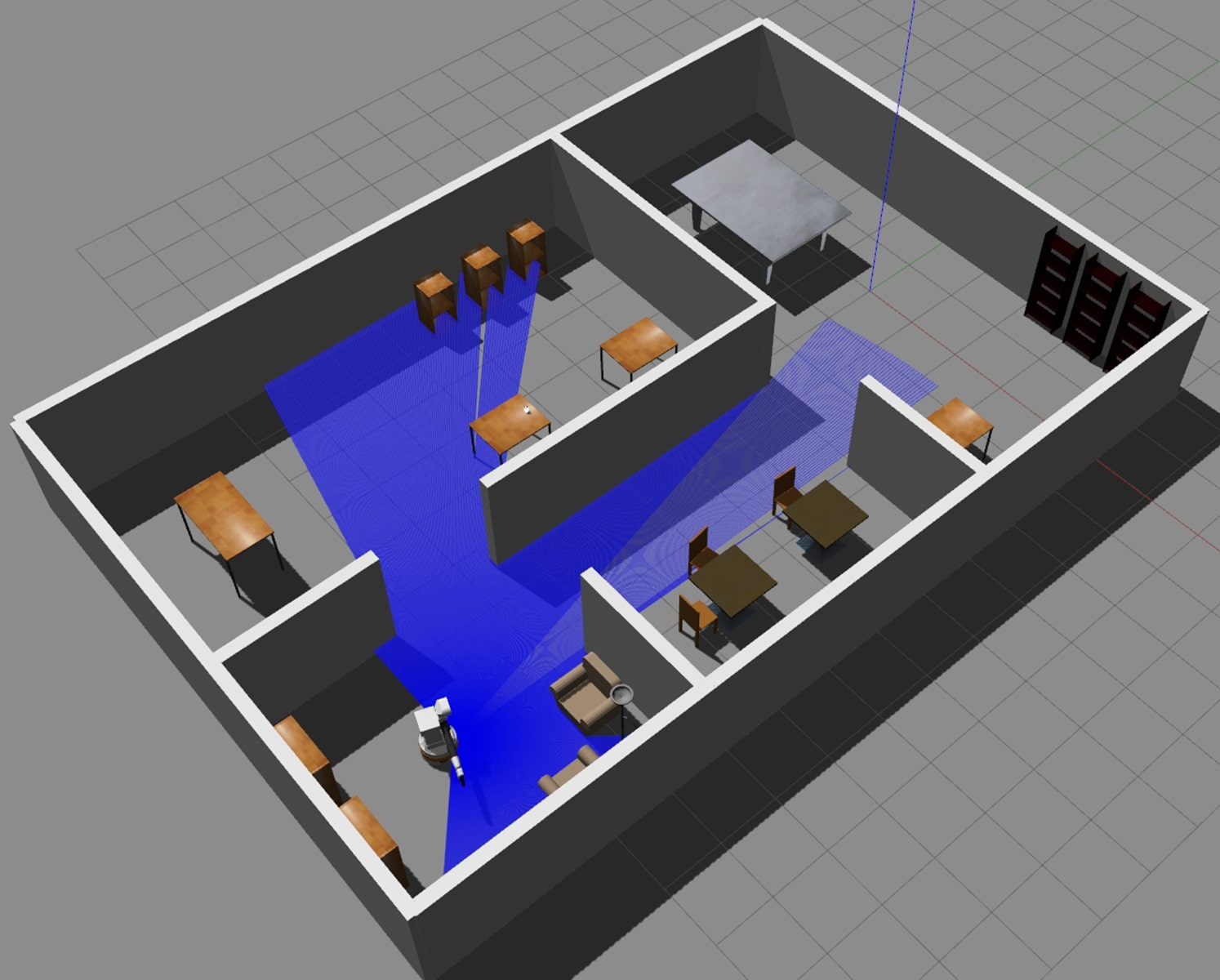

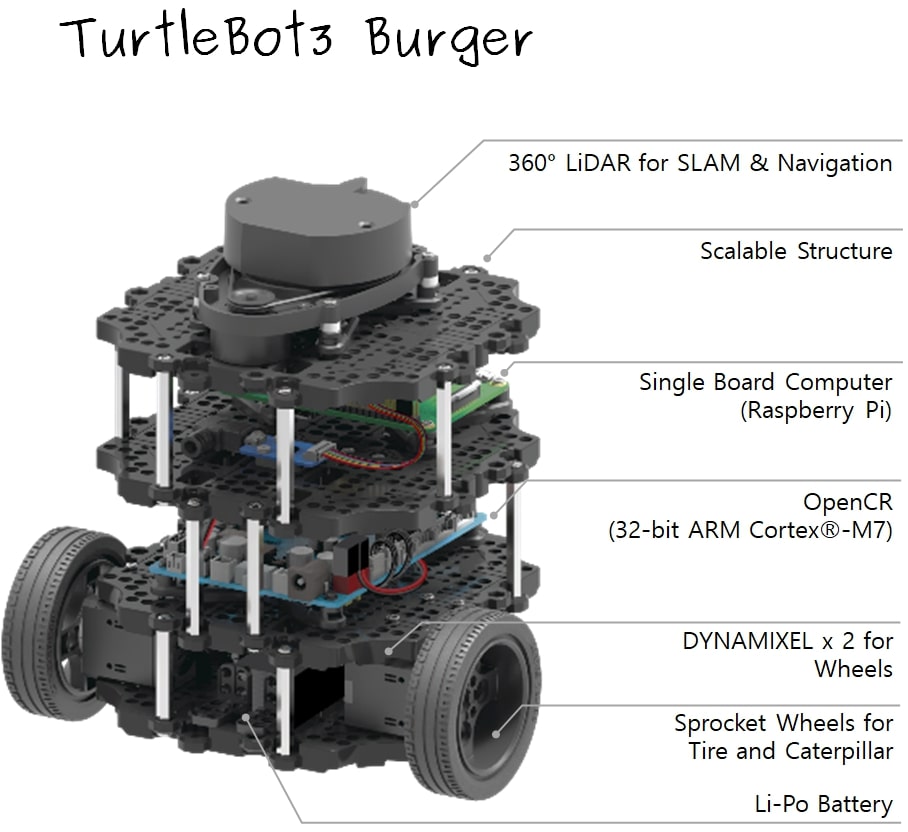

This project involved searching an unknown environment for a target using cooperative robots. The robots had no prior knowledge of the environment and had to explore it collaboratively to find and rescue the target. The project was written in Python and C++ and used the Robot Operating System (ROS), with Gazebo as the simulation engine. The robots were TurtleBot3 Burger models, each with two driven wheels and a lidar sensor.

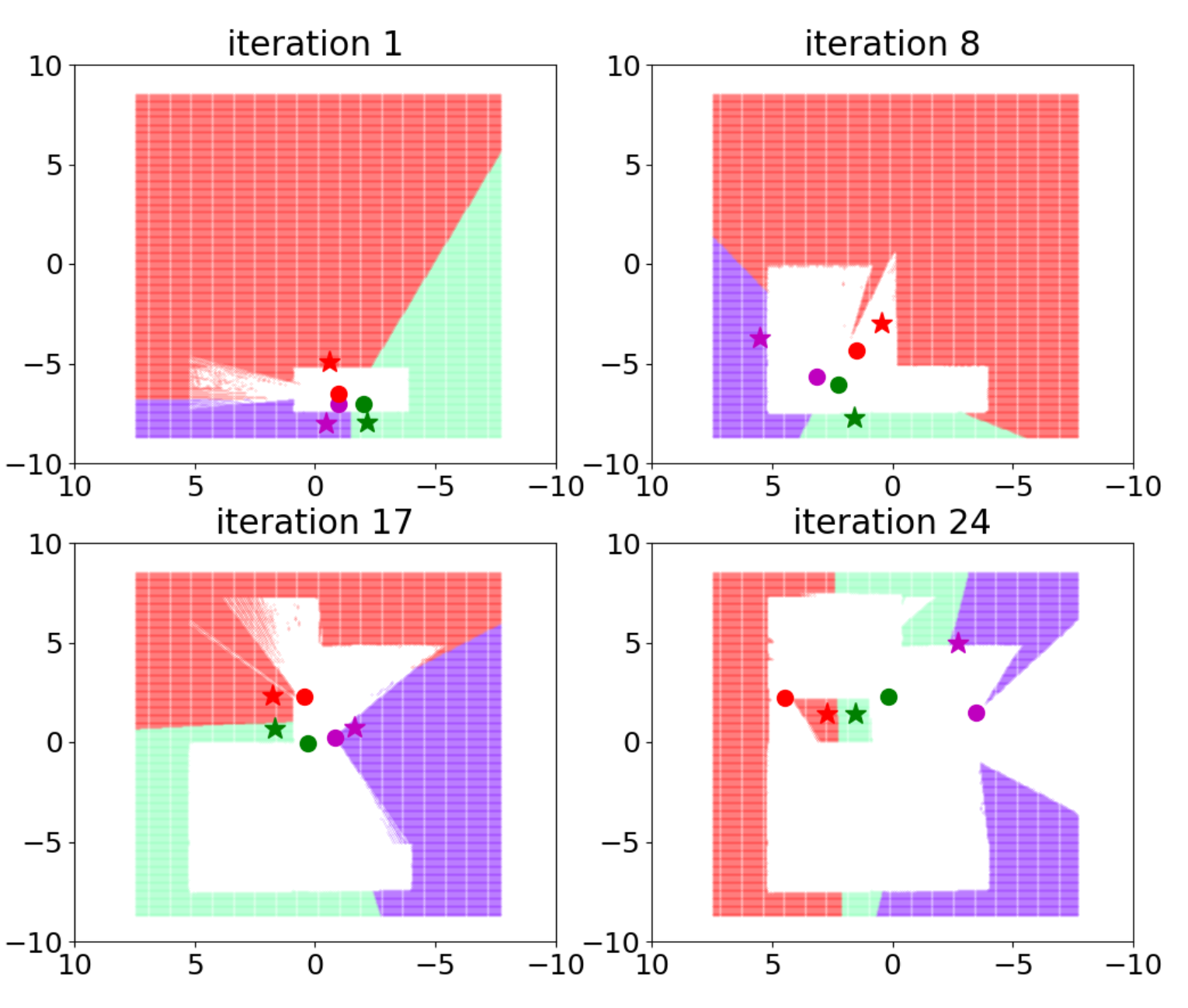

The unknown map contained obstacles, and the robots started close to each other on one side of the map. I used GMapping SLAM for localization and mapping and implemented a cooperative exploration approach based on dynamic Voronoi partitions. The robots explored the map cooperatively until the target was found. The density function then changed to draw the robots toward the target to rescue it.
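The source does not spell out the controller, so the following is a minimal sketch under an assumption. It uses a Lloyd-style coverage update, a common way to drive robots with dynamic Voronoi partitions: each robot repeatedly moves part-way toward the density-weighted centroid of its own Voronoi cell. The grid, both density functions, the gain, and every function name are hypothetical. It is a toy model, not the project's ROS code.

```python
import math

def voronoi_partition(cells, robots):
    """Assign every grid cell to its nearest robot (a discrete Voronoi partition)."""
    regions = {i: [] for i in range(len(robots))}
    for cell in cells:
        nearest = min(range(len(robots)), key=lambda i: math.dist(cell, robots[i]))
        regions[nearest].append(cell)
    return regions

def weighted_centroid(region, density):
    """Density-weighted centroid of a region, or None if the region has zero total weight."""
    total = sum(density(c) for c in region)
    if total == 0.0:
        return None
    cx = sum(c[0] * density(c) for c in region) / total
    cy = sum(c[1] * density(c) for c in region) / total
    return (cx, cy)

def exploration_density(unexplored):
    """Hypothetical exploration density: unexplored cells weigh 1, explored cells 0."""
    return lambda c: 1.0 if c in unexplored else 0.0

def rescue_density(target, sigma=1.0):
    """Hypothetical rescue density: a Gaussian bump centred on the found target."""
    return lambda c: math.exp(-math.dist(c, target) ** 2 / (2.0 * sigma ** 2))

def coverage_step(robots, cells, density, gain=0.5):
    """One Lloyd-style update: repartition, then move each robot part-way to its centroid."""
    regions = voronoi_partition(cells, robots)
    moved = []
    for i, (x, y) in enumerate(robots):
        goal = weighted_centroid(regions[i], density)
        if goal is None:
            moved.append((x, y))  # nothing of interest in this cell: stay put
        else:
            moved.append((x + gain * (goal[0] - x), y + gain * (goal[1] - y)))
    return moved

# Usage: two robots start together in one corner; the target has been found at (8, 8)
cells = [(x, y) for x in range(10) for y in range(10)]
robots = [(0.0, 0.0), (1.0, 0.0)]
target = (8, 8)
for _ in range(10):
    robots = coverage_step(robots, cells, rescue_density(target))
```

During exploration, `exploration_density` would be rebuilt from the shared map at each step. Swapping in `rescue_density` after detection is what pulls both robots toward the target.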

The explored map was shared between the robots to avoid exploring the same areas twice. I used the move_base package for costmaps and global/local planning: A* for global planning and the Dynamic Window Approach (DWA) for local collision avoidance.
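To illustrate the A* global planning step, here is a toy A* search on a 4-connected occupancy grid (0 = free, 1 = obstacle) with a Manhattan heuristic. The grid format and the function name are assumptions for illustration. This is not the move_base planner's implementation.

```python
import heapq

def astar(grid, start, goal):
    """A* on a 4-connected grid; returns the list of (row, col) cells from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible when every move costs 1 and diagonals are not allowed
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = []
            while cur is not None:  # walk parent links back to the start
                path.append(cur)
                cur = came_from[cur]
            return path[::-1]
        if g > g_cost[cur]:
            continue  # stale heap entry: a cheaper route to cur was already expanded
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cur
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None

# Usage: a wall across the middle row forces a detour through the right-hand column
grid = [[0, 0, 0],
        [1, 1, 0],
        [0, 0, 0]]
path = astar(grid, (0, 0), (2, 0))
```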

For a deeper dive into the project, see the GitHub repository: [Link]. The report is available here: [Link].

Robot Evacuation Mission Planning

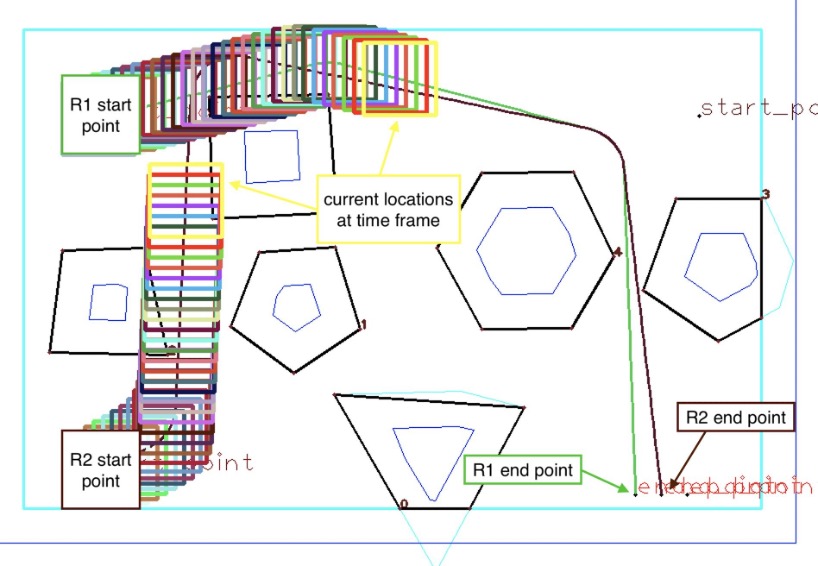

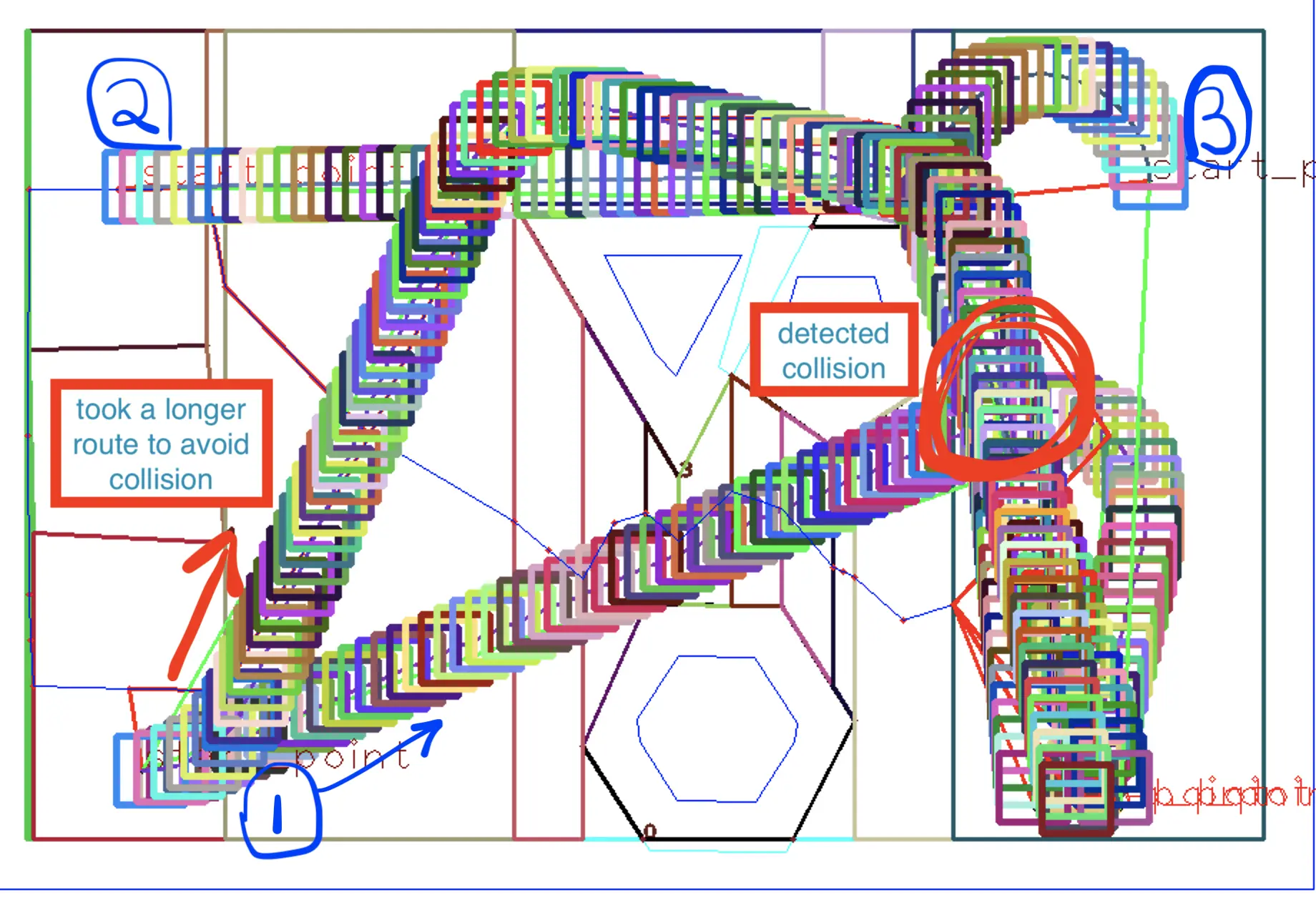

The goal of this project was to evacuate three robots from a room filled with obstacles as quickly as possible without collisions. The robots' initial positions, the gate's location, and the number and location of the obstacles were randomly generated. The solution was written entirely in C++ and used the Robot Operating System (ROS).

I based the solution on a roadmap built with the vertical cell decomposition algorithm. A path for each robot was then found with breadth-first search and later optimized to remove redundant movements. Once all paths were found, we checked recursively for collisions between the robots' paths and adjusted them accordingly.
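The breadth-first search over the roadmap can be sketched as follows. The roadmap is assumed to be an adjacency dictionary in which cell centroids (`c*`) and the midpoints of shared cell boundaries (`b*`) are nodes, as a vertical cell decomposition typically produces. The node names and the layout are hypothetical, and the original project was written in C++.

```python
from collections import deque

def bfs_path(roadmap, start, goal):
    """Breadth-first search over {node: [neighbours]}.
    Returns the path with the fewest edges from start to goal, or None if unreachable."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:  # follow parent links back to the start
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nb in roadmap.get(node, []):
            if nb not in parent:  # first visit is along a shortest (fewest-edge) route
                parent[nb] = node
                queue.append(nb)
    return None

# Usage: hypothetical roadmap with two routes around an obstacle, from a robot to the gate
roadmap = {
    "robot": ["c1"],
    "c1": ["robot", "b12", "b13"],
    "b12": ["c1", "c2"], "b13": ["c1", "c3"],
    "c2": ["b12", "b24"], "c3": ["b13", "b34"],
    "b24": ["c2", "c4"], "b34": ["c3", "c4"],
    "c4": ["b24", "b34", "gate"],
    "gate": ["c4"],
}
route = bfs_path(roadmap, "robot", "gate")
```

BFS minimizes the number of roadmap edges rather than the metric length. That is one reason a later smoothing pass is useful.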

The optimization process looked ahead along the path. From each point, it examined the upcoming points (up to a defined limit) and compared the distance from the current point to each point on that horizon. The shortest distance determined the next point to jump to, provided that no collision was detected.
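A look-ahead smoothing pass of this kind can be sketched as greedy shortcutting. This sketch swaps in a common variant of the selection rule rather than the project's exact distance comparison: from the current point, it jumps to the farthest point within the horizon that a straight, collision-free segment can reach. Obstacles are assumed to be circles `(cx, cy, radius)`, and the function names are hypothetical.

```python
import math

def segment_clear(p, q, obstacles, clearance=0.0):
    """True if segment p-q stays outside every circular obstacle (cx, cy, r) plus clearance."""
    px, py = p
    dx, dy = q[0] - px, q[1] - py
    seg_len2 = dx * dx + dy * dy
    for cx, cy, r in obstacles:
        # Parameter of the point on the segment closest to the obstacle centre, clamped to [0, 1]
        t = 0.0 if seg_len2 == 0 else max(0.0, min(1.0, ((cx - px) * dx + (cy - py) * dy) / seg_len2))
        closest = (px + t * dx, py + t * dy)
        if math.dist(closest, (cx, cy)) <= r + clearance:
            return False
    return True

def shortcut(path, obstacles, horizon=5):
    """Greedy look-ahead smoothing: from each kept point, jump to the farthest point within
    `horizon` steps reachable by a collision-free straight segment."""
    if not path:
        return []
    result = [path[0]]
    i = 0
    while i < len(path) - 1:
        nxt = i + 1  # fallback: the original edge, assumed collision-free by construction
        for j in range(min(i + horizon, len(path) - 1), i + 1, -1):
            if segment_clear(path[i], path[j], obstacles):
                nxt = j
                break
        result.append(path[nxt])
        i = nxt
    return result

# Usage: a path that bends around an obstacle centred at (2, 0)
zigzag = [(0, 0), (1, 1), (2, 1), (3, 1), (4, 0)]
smoothed = shortcut(zigzag, [(2, 0, 0.5)])
```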

Further details, including the full code, are available on the GitHub repository: [Link].

Robot Mission Planner Simulation

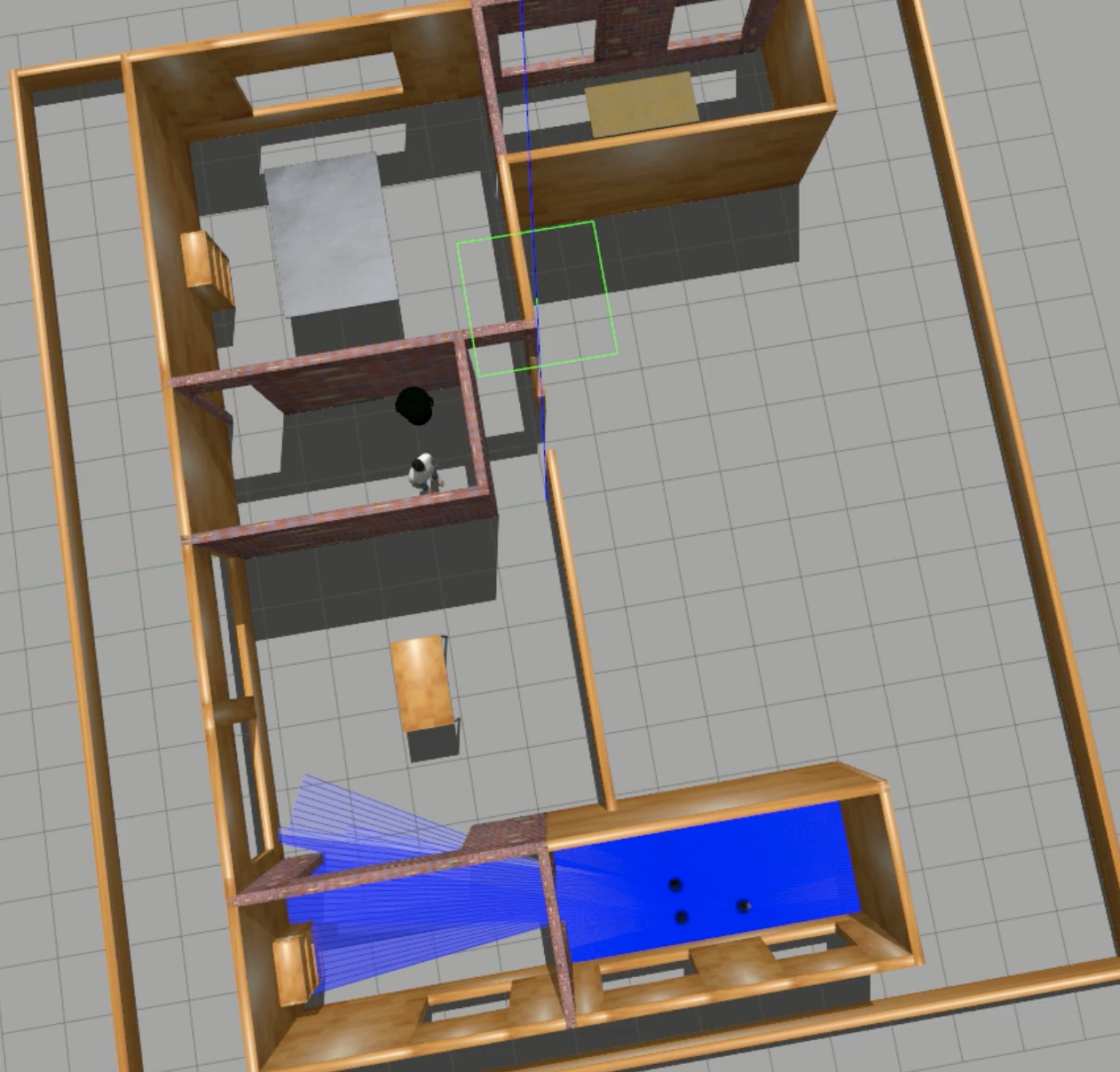

This project was a Gazebo simulation of a TIAGo robot localizing in a previously mapped apartment. The robot was equipped with several onboard sensors and a manipulator, which allowed it to navigate and interact with the environment through simple manipulation tasks. The robot had modules for path planning, control, localization, sensing, and manipulation.

The primary mission was to navigate to a specific location, pick up an object, and transport it to a different target location. To accomplish this, we used the robot's sensors and actuators through the provided software packages. The simulation used the Robot Operating System (ROS).

During the localization step, the robot spun in place to gather as much information as possible about its surroundings. We used a particle filter for localization. ArUco markers were used to estimate the pose of the object (a cube), which the robot could then target with its manipulator once the marker was in view.
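The predict/update/resample cycle of a particle filter can be illustrated with a deliberately small 1-D example. A robot in a corridor measures its range to a wall at a known position. The wall position, noise levels, and particle count are all hypothetical. The real robot localized in 2-D against the apartment map, so this toy only shows the mechanics.

```python
import math
import random

def gaussian(x, mu, sigma):
    """Normal probability density, used as the range-sensor likelihood."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def pf_step(particles, control, measurement, wall, rng, motion_noise=0.1, sensor_noise=0.2):
    """One predict / update / resample cycle of a 1-D particle filter.
    `measurement` is the measured range to a wall at the hypothetical position `wall`."""
    # Predict: apply the odometry command to every particle, plus motion noise
    moved = [p + control + rng.gauss(0.0, motion_noise) for p in particles]
    # Update: weight each particle by how well it explains the range reading
    weights = [gaussian(measurement, wall - p, sensor_noise) for p in moved]
    if sum(weights) == 0.0:
        return moved  # every weight underflowed: keep the prediction rather than divide by zero
    # Resample: draw a new particle set in proportion to the weights
    return rng.choices(moved, weights=weights, k=len(moved))

# Usage: the robot starts at x = 2.0 with no idea where it is (uniform prior over 0..10)
rng = random.Random(0)
wall, true_x = 10.0, 2.0
particles = [rng.uniform(0.0, 10.0) for _ in range(1000)]
for _ in range(10):
    true_x += 0.5                                # robot drives 0.5 m forward
    z = wall - true_x + rng.gauss(0.0, 0.2)      # noisy range reading to the wall
    particles = pf_step(particles, 0.5, z, wall, rng)
estimate = sum(particles) / len(particles)
```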

Both a state machine and a behavior tree were used to model the robot's behavior, and the robot could recognize when it had been kidnapped (moved to an unknown pose) and re-localize.
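A state machine for this mission, including kidnapping recovery, might look like the sketch below. The state names, the transition tables, and the boolean `kidnapped` input are assumptions. How kidnapping is detected (for example, from a sudden collapse in particle weights) is outside the sketch. After re-localizing, an interrupted manipulation step resumes from the navigation step before it, since the robot's pose has changed.

```python
from enum import Enum, auto

class State(Enum):
    LOCALIZE = auto()
    GO_TO_PICK = auto()
    PICK = auto()
    GO_TO_PLACE = auto()
    PLACE = auto()
    DONE = auto()

# Nominal mission order: each state advances when its task reports success
NEXT = {
    State.GO_TO_PICK: State.PICK,
    State.PICK: State.GO_TO_PLACE,
    State.GO_TO_PLACE: State.PLACE,
    State.PLACE: State.DONE,
}
# Where to resume after a kidnapping interrupts a given state
RESUME = {
    State.GO_TO_PICK: State.GO_TO_PICK,
    State.PICK: State.GO_TO_PICK,
    State.GO_TO_PLACE: State.GO_TO_PLACE,
    State.PLACE: State.GO_TO_PLACE,
}

class MissionFSM:
    def __init__(self):
        self.state = State.LOCALIZE
        self.resume = State.GO_TO_PICK  # first task after the initial localization

    def step(self, task_succeeded, kidnapped):
        """Advance the mission by one decision; returns the new state."""
        if self.state is State.DONE:
            return self.state
        if kidnapped and self.state is not State.LOCALIZE:
            self.resume = RESUME[self.state]  # remember what to redo
            self.state = State.LOCALIZE
        elif task_succeeded:
            self.state = self.resume if self.state is State.LOCALIZE else NEXT[self.state]
        return self.state  # unchanged if the current task is still running

# Usage: nominal run with one kidnapping while driving to the place location
fsm = MissionFSM()
trace = [fsm.step(ok, kid) for ok, kid in [
    (True, False), (True, False), (False, False), (True, False),
    (False, True), (True, False), (True, False), (True, False)]]
```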

Further details, including the full code, are available on the GitHub repository: [Link]. You can also view a video showcasing the project on YouTube: [Link].

Perception Sensor Fusion

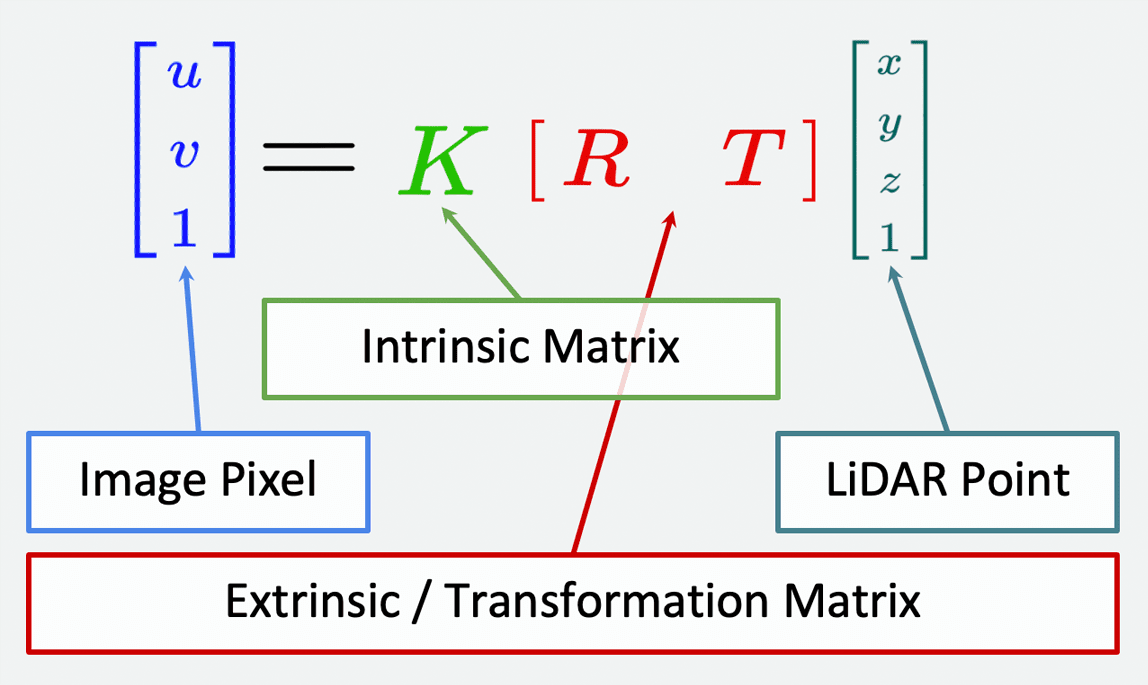

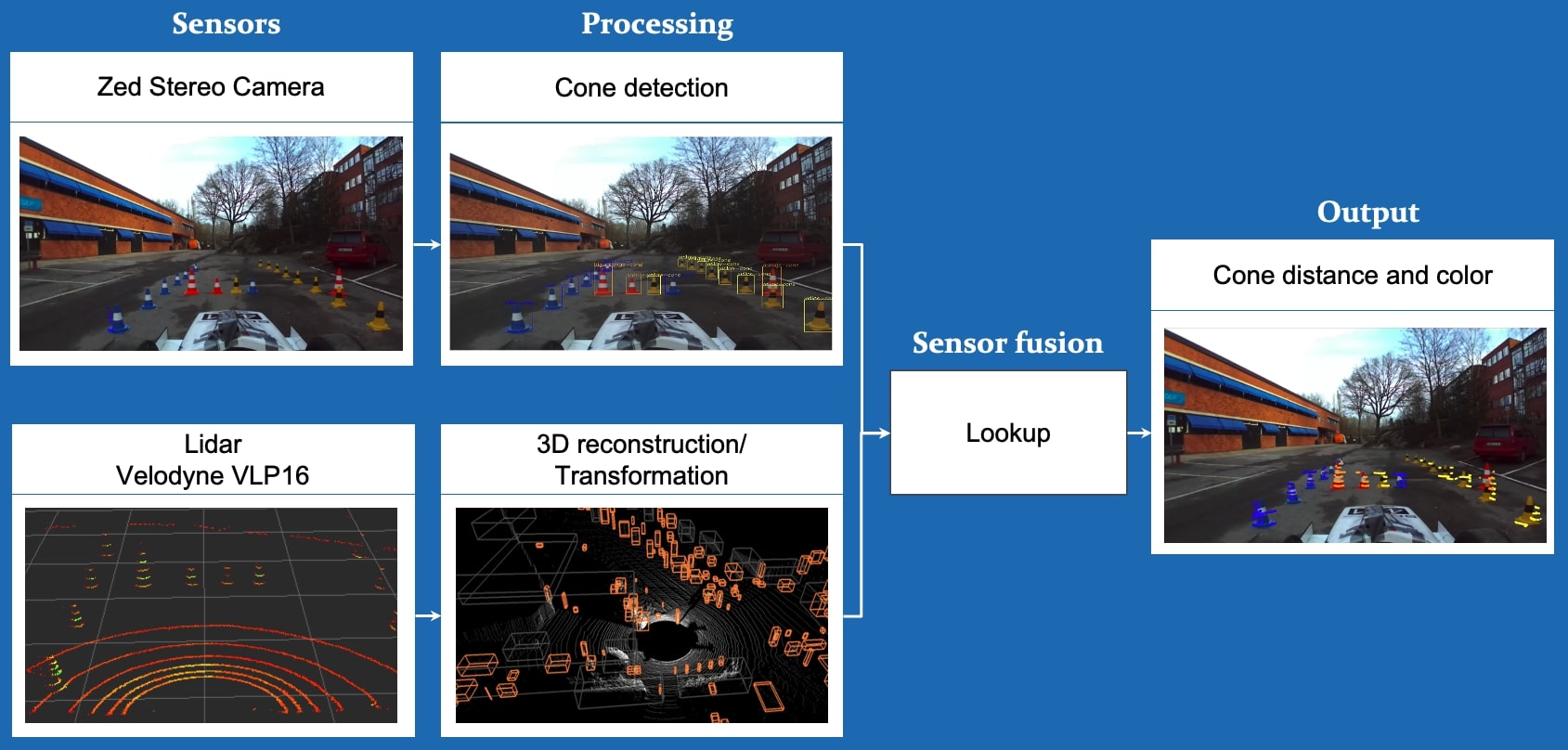

As a member of the Driverless Formula team, I focused on fusing the camera and lidar inputs. We used a ZED stereo camera and a Velodyne VLP-16 lidar, with an NVIDIA Xavier for GPU computing, and the driverless pipeline was built on the Robot Operating System (ROS).

YOLO (You Only Look Once), a real-time object detection CNN, processed the ZED camera images. It detected the cones and returned each cone's color and bounding-box coordinates. The lidar point cloud, transformed into the camera image frame, was then used to determine the distance to each cone accurately. This required the camera's intrinsic matrix and the lidar-to-camera extrinsic matrix, which we calculated using MATLAB's camera calibration toolbox.
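The fusion step can be sketched with a pinhole projection. Each lidar point is moved into the camera frame with the extrinsics (R, t), then projected to pixels with the intrinsics (fx, fy, cx, cy). Points that land inside a YOLO bounding box give the cone's range. The calibration values, the box format `(u_min, v_min, u_max, v_max)`, and the choice of the median range are illustrative assumptions, not the team's calibration or code. The example R maps a lidar frame (x forward, y left, z up) to a camera frame (x right, y down, z forward).

```python
import math
import statistics

def lidar_to_pixel(point, R, t, K):
    """Project a lidar point into the image using extrinsics R (3x3), t (3,) and
    intrinsics K = {fx, fy, cx, cy}. Returns (u, v, range) or None if behind the camera."""
    x, y, z = point
    Xc, Yc, Zc = (R[i][0] * x + R[i][1] * y + R[i][2] * z + t[i] for i in range(3))
    if Zc <= 0:
        return None
    u = K["fx"] * Xc / Zc + K["cx"]
    v = K["fy"] * Yc / Zc + K["cy"]
    return u, v, math.sqrt(x * x + y * y + z * z)  # range measured from the lidar

def cone_distance(box, points, R, t, K):
    """Median range of the lidar points whose projection falls inside a detection box."""
    u_min, v_min, u_max, v_max = box
    ranges = []
    for p in points:
        proj = lidar_to_pixel(p, R, t, K)
        if proj is not None and u_min <= proj[0] <= u_max and v_min <= proj[1] <= v_max:
            ranges.append(proj[2])
    return statistics.median(ranges) if ranges else None

# Usage with hypothetical calibration: camera and lidar co-located, axes remapped
R = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]
t = (0.0, 0.0, 0.0)
K = {"fx": 700.0, "fy": 700.0, "cx": 640.0, "cy": 360.0}
cloud = [(5.0, 0.0, 0.0), (5.2, 0.05, 0.0), (4.9, -0.05, 0.1), (5.0, 1.0, 0.0)]
dist = cone_distance((600, 320, 680, 400), cloud, R, t, K)
```

The median is one robust choice against stray background points inside the box. A real pipeline might also cluster points before taking a range.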

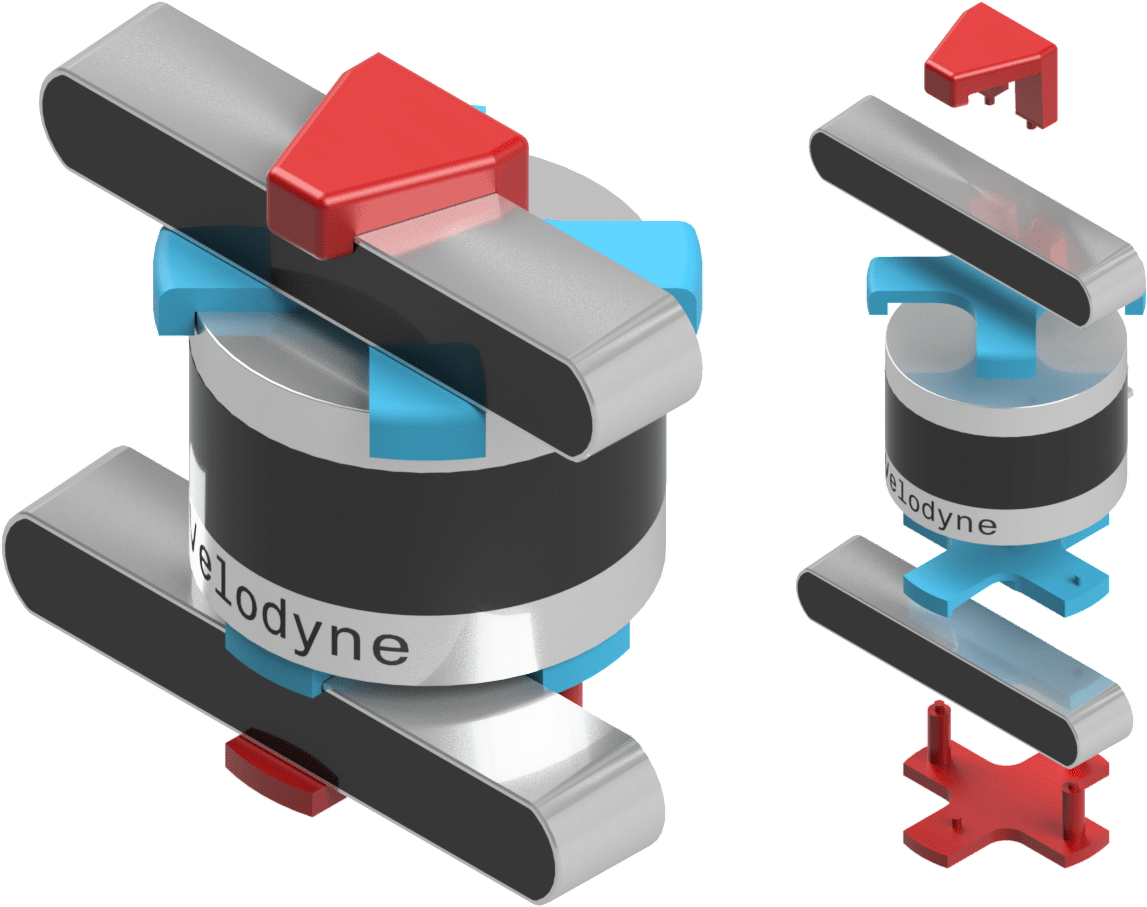

Our final output contained the location, distance, and color of every cone, along with a timestamp and covariance matrices. It was passed to the next stage of the pipeline, SLAM. We also designed and 3D-printed a bracket that mounted the lidar and camera together, keeping their relative position consistent across calibration procedures.

Optimization Based Robot Control

I took an elective course in my master's program that covered a wide range of robot control methods and algorithms. It included reactive control in both joint space and task space, focusing on PID, inverse dynamics, operational space control, task-space inverse dynamics, and impedance control.

We explored optimization-based control that formulates inverse dynamics as a quadratic program (QP), with applications such as under-actuated systems and rigid contacts. We also covered several methods for solving optimal control problems, such as dynamic programming and direct methods (single shooting, multiple shooting, and collocation).

One of the key topics was the Linear Quadratic Regulator (LQR), a special optimal control problem with linear dynamics and a quadratic cost. We extended it to nonlinear problems using Differential Dynamic Programming (DDP).
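For a scalar system the discrete-time LQR reduces to iterating one Riccati equation until it reaches a fixed point. The sketch below makes that concrete. The system and cost weights in the usage lines are hypothetical. A matrix-valued system follows the same recursion with transposes and a matrix inverse.

```python
def scalar_lqr(a, b, q, r, max_iters=1000, tol=1e-12):
    """Infinite-horizon discrete-time LQR for x[k+1] = a*x[k] + b*u[k]
    with cost sum(q*x[k]**2 + r*u[k]**2). Iterates the Riccati recursion to a
    fixed point; returns (K, P) where u = -K*x and P*x**2 is the optimal cost-to-go."""
    p = q
    for _ in range(max_iters):
        k = a * b * p / (r + b * b * p)         # gain implied by the current cost-to-go
        p_next = q + a * a * p - a * b * p * k  # P = q + a^2 P - (a b P)^2 / (r + b^2 P)
        if abs(p_next - p) < tol:
            p = p_next
            break
        p = p_next
    return a * b * p / (r + b * b * p), p

# Usage: an open-loop unstable system (a = 1.2) stabilised by the LQR gain
K, P = scalar_lqr(a=1.2, b=1.0, q=1.0, r=1.0)
closed_loop_pole = 1.2 - 1.0 * K
```

For a = b = q = r = 1 the fixed point solves P² = P + 1, so P is the golden ratio and K = 1/P.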

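Model Predictive Control (MPC) was another important method we studied. It repeatedly optimizes a trajectory over a finite horizon from the current state and applies the first control input, so the optimization itself provides the feedback loop that follows the trajectory. We also tackled the challenges of stability and recursive feasibility.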

The course also covered reinforcement learning algorithms for problems modeled as a Markov Decision Process (MDP). We discussed prediction methods such as Monte Carlo and Temporal Difference learning (TD(0), TD(λ)), and control methods such as Q-learning and SARSA. We briefly touched on value function approximation, such as Deep Q-Networks (DQN), which I implemented as the main project for the course.
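Tabular Q-learning, the control method that DQN builds on, fits in a few lines. The sketch below uses a hypothetical five-state corridor with a reward for reaching the right end, and the hyperparameters are illustrative. It is not the DQN course project, which replaces the table with a neural network.

```python
import random

N_STATES, GOAL = 5, 4   # hypothetical corridor: states 0..4, reward on reaching state 4
ACTIONS = (-1, +1)      # move left / move right

def env_step(s, a):
    """Deterministic corridor dynamics; walls clamp the position."""
    s2 = min(max(s + a, 0), N_STATES - 1)
    return s2, (1.0 if s2 == GOAL else 0.0), s2 == GOAL

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    Q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)  # explore
            else:
                best = max(Q[(s, b)] for b in ACTIONS)
                a = rng.choice([b for b in ACTIONS if Q[(s, b)] == best])  # greedy, random tie-break
            s2, r, done = env_step(s, a)
            # Off-policy TD target: bootstrap from the greedy value of the next state
            target = r if done else r + gamma * max(Q[(s2, b)] for b in ACTIONS)
            Q[(s, a)] += alpha * (target - Q[(s, a)])
            s = s2
            if done:
                break
    return Q

Q = q_learning()
policy = [max(ACTIONS, key=lambda a: Q[(s, a)]) for s in range(GOAL)]
```

SARSA would differ only in the target. It bootstraps from `Q[(s2, a2)]` for the action actually chosen next instead of from the greedy maximum.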

Robot Exploration Simulation

The objective of this project was to create a Gazebo simulation of a TurtleBot using the Robot Operating System (ROS). Exploration was based on a receding horizon "next-best-view" (RH-NBV) strategy.

Collision avoidance was based on the obstacle-restriction method (ORM) combined with pure pursuit. Hector SLAM was used to map the environment and to localize the robot within it.
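The pure pursuit part of this approach can be sketched as follows. The robot picks a look-ahead point on the path, expresses it in its own frame, and follows the circular arc through it, with curvature 2y/L². The function name, the waypoint format, and the look-ahead search are illustrative assumptions. The ORM obstacle-handling layer is not covered here.

```python
import math

def pure_pursuit(pose, path, lookahead, speed):
    """Pure pursuit for a differential-drive robot.
    pose = (x, y, theta) in the map frame; path = list of (x, y) waypoints.
    Returns (v, omega): forward speed and yaw rate (counter-clockwise positive)."""
    x, y, theta = pose
    # Search forward from the waypoint closest to the robot
    start = min(range(len(path)), key=lambda i: math.dist((x, y), path[i]))
    target = path[-1]  # fall back to the last waypoint near the end of the path
    for p in path[start:]:
        if math.dist((x, y), p) >= lookahead:
            target = p
            break
    # Express the look-ahead point in the robot frame (x forward, y to the left)
    dx, dy = target[0] - x, target[1] - y
    local_x = math.cos(theta) * dx + math.sin(theta) * dy
    local_y = -math.sin(theta) * dx + math.cos(theta) * dy
    dist2 = local_x ** 2 + local_y ** 2
    if dist2 == 0.0:
        return 0.0, 0.0  # already at the goal
    # Curvature of the circular arc through the robot and the look-ahead point
    curvature = 2.0 * local_y / dist2
    return speed, speed * curvature

# Usage: a path curving up and to the left of a robot facing along +x
v, omega = pure_pursuit((0.0, 0.0, 0.0), [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)], 1.0, 0.5)
```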

A ROS controller node combined the exploration, SLAM, and collision avoidance nodes to move the robot through the environment.

6 Axis Robot Arm

This project involved building a 6-axis robot arm using a 3D printer, servo motors, and an Arduino Mega with a RepRap shield and servo drives.

The next step in development is to build a ROS interface that controls the servos using inverse kinematics. We also plan to add an OpenCV-based vision system to identify targets, enabling the arm to pick up and deliver payloads.

You can view a timeline of pictures of the robot via this link: [Link]. A demonstration of the project can be seen in this video: [Link].

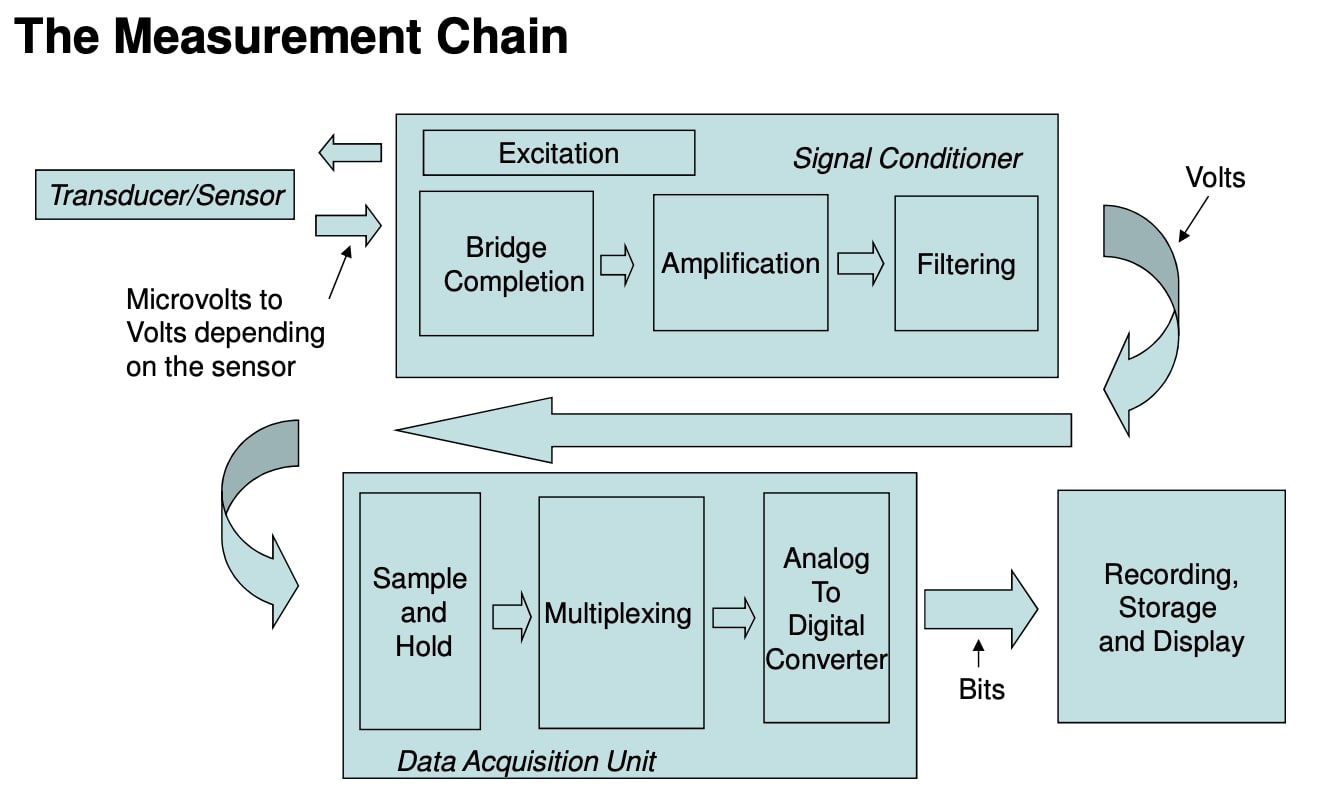

Applied Instrumentation

In this program, I learned about the design, implementation, deployment, and analysis of measurement chains, and gained exposure to test system instrumentation, particularly in the gas turbine engine industry.

I also learned how to apply a measurement system design methodology and how to evaluate system accuracy, and gained experience with LabVIEW.
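A standard way to evaluate the accuracy of a measurement chain is to combine independent uncertainty contributions by root-sum-square and then expand the result with a coverage factor. This is the GUM-style approach. It may differ from the exact methodology taught in the program. The uncertainty budget below is hypothetical.

```python
import math

def combined_uncertainty(components):
    """Root-sum-square (RSS) combination of independent standard uncertainties,
    all expressed in the same unit."""
    return math.sqrt(sum(u * u for u in components))

def expanded_uncertainty(components, k=2.0):
    """Expanded uncertainty U = k * u_c; k = 2 gives roughly 95 % coverage if the
    combined error is approximately normally distributed."""
    return k * combined_uncertainty(components)

# Usage: hypothetical temperature-channel budget, standard uncertainties in degrees C
budget = {"thermocouple": 0.5, "signal conditioning": 0.2, "DAQ card": 0.3}
u_c = combined_uncertainty(budget.values())
U = expanded_uncertainty(budget.values())
```

RSS assumes the error sources are uncorrelated. Correlated contributions would need covariance terms added to the sum.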

As part of the program, I worked on three group projects that involved designing measurement systems to tackle real-life design problems.